That's strange: by default, openSUSE creates one partition for the system (BTRFS) and a separate one for data (ext4 or XFS). That layout is also the safest choice, because losing one partition doesn't take the other with it, and it gives you much better control over the available space.

My recommendation is, of course, to keep system and data (/home) on separate partitions. Since you are using an SSD, you should also take advantage of a BTRFS feature that saves space and reduces SSD wear: transparent (on-the-fly) compression. In everyday use you probably won't notice anything, except that you will have more free space and your SSD will wear less, because less data is written to it.

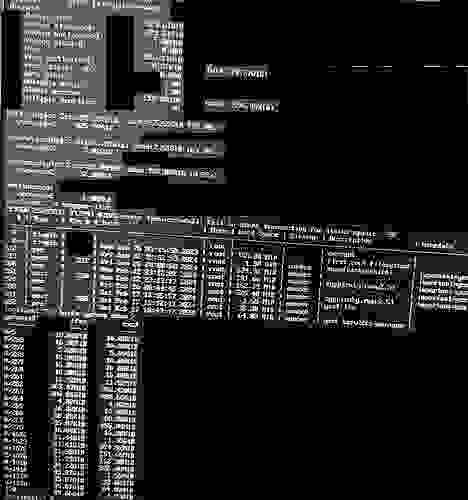

Here you can see the `compress` option in my /etc/fstab, set to a high zstd level (12; BTRFS accepts zstd levels up to 15) for some of my system BTRFS subvolumes:

```
UUID=0ad79315-b215-4f5c-b526-cf0187e60298 /var btrfs subvol=/@/var,compress=zstd:12 0 0
UUID=0ad79315-b215-4f5c-b526-cf0187e60298 /usr/local btrfs subvol=/@/usr/local,compress=zstd:12 0 0
UUID=0ad79315-b215-4f5c-b526-cf0187e60298 /tmp btrfs subvol=/@/tmp,compress=zstd:12 0 0
UUID=0ad79315-b215-4f5c-b526-cf0187e60298 /srv btrfs subvol=/@/srv,compress=zstd:12 0 0
UUID=0ad79315-b215-4f5c-b526-cf0187e60298 /root btrfs subvol=/@/root,compress=zstd:12 0 0
UUID=0ad79315-b215-4f5c-b526-cf0187e60298 /opt btrfs subvol=/@/opt,compress=zstd:12 0 0
```

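Open /etc/fstab with administrator privileges and add the compress option to the BTRFS subvolumes that actually hold compressible files, such as /var, /usr, /opt and /srv. In your case, you can do the same for the /home BTRFS subvolume. Do NOT compress subvolumes such as /efi or /grub2. Keep in mind that, according to the BTRFS documentation, most mount options (compress included) currently apply to the whole filesystem, and only the options of the first subvolume mounted take effect.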
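Editing fstab by hand is easy to get wrong, so here is a minimal sketch of a shell function (`add_compress` is a hypothetical name, not an openSUSE tool) that appends `compress=zstd:LEVEL` to the options of the BTRFS entries you list and prints the result, leaving /etc/fstab itself untouched. It assumes the usual six-field fstab layout and leaves alone any entry that already has a compress option.

```shell
# add_compress LEVEL MOUNTPOINT...  (hypothetical helper, not part of openSUSE)
# Reads fstab-format text on stdin and prints it to stdout, appending
# compress=zstd:LEVEL to the options field of btrfs entries whose mount
# point is listed. Comments, other filesystems and entries that already
# have compress= or compress-force= are printed unchanged.
add_compress() {
    level=$1
    shift
    awk -v level="$level" -v targets="$*" '
        BEGIN {
            n = split(targets, t, " ")
            for (i = 1; i <= n; i++) want[t[i]] = 1
        }
        $1 !~ /^#/ && $3 == "btrfs" && ($2 in want) &&
        $4 !~ /(^|,)compress(-force)?=/ {
            # Rewriting a field re-joins the line with single spaces
            $4 = $4 ",compress=zstd:" level
        }
        { print }
    '
}
```

For example, `add_compress 12 /var /opt /srv /home < /etc/fstab > /tmp/fstab.new`, then `diff /etc/fstab /tmp/fstab.new` to review the changes before copying the file into place. Modified lines come out with single spaces between fields, which fstab accepts.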

Once you have saved fstab with the compress options added, either reboot or remount the filesystem so the new options take effect:

```
sudo mount -o remount,compress=zstd:12 /
```
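To confirm the option is active after rebooting or remounting, you can read the live mount options with `findmnt -no OPTIONS`. The small helper below (`compress_opt`, a hypothetical name) is a sketch that just pulls the compress entry out of a comma-separated options string, or prints `none` if compression is not set.

```shell
# compress_opt OPTIONS  (hypothetical helper)
# Prints the compress=... or compress-force=... entry from a comma-separated
# mount option string, or "none" if compression is not set.
compress_opt() {
    printf '%s\n' "$1" | tr ',' '\n' | grep -E '^compress(-force)?=' || echo none
}

# Example: inspect the options the kernel is actually using for /var
# compress_opt "$(findmnt -no OPTIONS /var)"
```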

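Enabling compression only affects data written from now on; existing files stay as they are. To recompress the files already stored in the subvolumes where you enabled compression, run:

```
sudo btrfs filesystem defragment -rvf -czstd /var /usr /opt /srv /home
```

(include every subvolume where you enabled compression). Be aware that defragmenting breaks the data sharing between files and their snapshots (such as those created by Snapper), so disk usage can go up until the old snapshots are deleted.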
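If some of those paths don't exist on your system, a loop that skips missing directories avoids errors halfway through. This is a minimal sketch; `recompress` and the `DRY_RUN` variable are hypothetical names used only here, and setting `DRY_RUN` to any non-empty value prints the commands instead of running them.

```shell
# recompress DIR...  (hypothetical helper; run as root)
# Recompresses existing files with zstd, one directory at a time.
# Missing directories are reported on stderr and skipped.
# With DRY_RUN set to a non-empty value, commands are printed, not executed.
recompress() {
    run=${DRY_RUN:+echo}
    for dir in "$@"; do
        if [ -d "$dir" ]; then
            $run btrfs filesystem defragment -r -v -f -czstd "$dir"
        else
            echo "skipping $dir: no such directory" >&2
        fi
    done
}
```

For example, `DRY_RUN=1 recompress /var /usr /opt /srv /home` shows what would run; drop `DRY_RUN=1` (and run as root) to actually recompress.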

Finally, you can check how much disk space compression is saving:

```
sudo zypper in compsize   # install the compsize tool
sudo compsize -x /
```
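In compsize's TOTAL row, Disk Usage is the space actually used on disk and Uncompressed is the size the same data would take without compression, so the space saved is Uncompressed minus Disk Usage. A small sketch of that arithmetic, assuming compsize's `-b` option (raw byte counts instead of human-readable sizes; check `compsize --help` on your version) and the column order Type, Perc, Disk Usage, Uncompressed, Referenced. The function name `saved_bytes` is hypothetical.

```shell
# saved_bytes  (hypothetical helper)
# Reads compsize output on stdin and prints Uncompressed - Disk Usage
# from the TOTAL row, i.e. the bytes saved by compression.
saved_bytes() {
    awk '$1 == "TOTAL" { printf "%.0f\n", $4 - $3 }'
}

# Usage: sudo compsize -b -x / | saved_bytes
```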